Ejabberd Installation and Operation Guide

February 3, 2003

1 Introduction

ejabberd is a Free and Open Source distributed fault-tolerant Jabber server. It is written mostly in Erlang.

The main features of ejabberd are:

- Distribution. You can run ejabberd on a cluster of machines, all of which serve one Jabber domain.

- Fault-tolerance. You can set up an ejabberd cluster so that all information required for proper operation is stored permanently on more than one machine; if one of them crashes, the others continue working without interruption. You can also replace or add machines ``on the fly''.

- Support for JEP-0030 (Service Discovery).

- Support for JEP-0039 (Statistics Gathering).

- Support for the xml:lang attribute in many XML elements.

- JUD based on users' vCards.

2 Installation

2.1 Installation Requirements

To compile ejabberd, you need the following packages:

- GNU Make;

- GCC;

- libexpat 1.95 or later;

- Erlang/OTP R8B or later.

2.2 Obtaining

No stable version has been released yet.

The latest alpha version can be retrieved via CVS. Follow these steps:

- export CVSROOT=:pserver:cvs@www.jabber.ru:/var/spool/cvs

- cvs login

- Press Enter at the password prompt (the password is empty)

- cvs -z3 co ejabberd

2.3 Compilation

./configure

make

TBD

2.4 Starting

... To use more than 1024 connections, you need to set the environment variable ERL_MAX_PORTS:

export ERL_MAX_PORTS=32000

Note that with this value ejabberd will use more memory (approximately 6 MB more)...

erl -name ejabberd -s ejabberd

TBD

3 Configuration

3.1 Initial Configuration

The configuration file is loaded after the first start of ejabberd. It consists of a sequence of Erlang terms. The part of a line after a `%' sign is ignored. Each term is a tuple whose first element is the option name and whose remaining elements are the option values. Note that after the first start all values from this file are stored in the database, and on subsequent starts they are APPENDED to the existing values. E.g. if this file does not contain a ``host'' definition, then the old value is used.

To override old values, the following lines can be added to the config:

override_global.

override_local.

override_acls.

With these lines, the old global options, local options, or ACLs are removed before the new ones are added.

3.1.1 Host Name

The host option defines the name of the Jabber domain that ejabberd serves. E.g. to use the jabber.org domain, add the following line to the config:

{host, "jabber.org"}.

3.1.2 Access Rules

Access control in ejabberd is done via Access Control Lists (ACLs). An ACL declaration in the config file has the following syntax:

{acl, <aclname>, {<acltype>, ...}}.

<acltype> can be one of the following:

- all: Matches all JIDs. Example:

{acl, all, all}.

- {user, <username>}: Matches the local user named <username>. Example:

{acl, admin, {user, "aleksey"}}.

- {user, <username>, <server>}: Matches the user with JID <username>@<server> and any resource. Example:

{acl, admin, {user, "aleksey", "jabber.ru"}}.

- {server, <server>}: Matches any JID from server <server>. Example:

{acl, jabberorg, {server, "jabber.org"}}.

- {user_regexp, <regexp>}: Matches any local user whose name matches <regexp>. Example:

{acl, tests, {user_regexp, "^test[0-9]*$"}}.

- {user_regexp, <regexp>, <server>}: Matches any user from server <server> whose name matches <regexp>. Example:

{acl, tests, {user_regexp, "^test", "localhost"}}.

- {server_regexp, <regexp>}: Matches any JID from a server whose name matches <regexp>. Example:

{acl, icq, {server_regexp, "^icq\\."}}.

- {node_regexp, <user_regexp>, <server_regexp>}: Matches any user whose name matches <user_regexp> and whose server matches <server_regexp>. Example:

{acl, aleksey, {node_regexp, "^aleksey", "^jabber\\.(ru|org)$"}}.

- {user_glob, <glob>}

- {user_glob, <glob>, <server>}

- {server_glob, <glob>}

- {node_glob, <user_glob>, <server_glob>}: These four types are the same as the corresponding regexp types above, but use shell glob patterns instead of regular expressions. These patterns can contain the following special characters:

  - `*' matches any string, including the null string.

  - `?' matches any single character.

  - `[...]' matches any one of the enclosed characters. Character ranges are specified by a pair of characters separated by a `-'. If the first character after `[' is a `!', then any character not enclosed is matched.
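The glob rules described above appear to coincide with the pattern syntax of the fnmatch module in Python's standard library, so they can be tried out with a short sketch. This is only an illustration in Python, not ejabberd code (ejabberd itself is written in Erlang); the helper name `matches_node_glob` is hypothetical, and case-sensitive comparison is an assumption.

```python
from fnmatch import fnmatchcase


def matches_node_glob(user, server, user_glob, server_glob):
    """Hypothetical helper modelled on {node_glob, <user_glob>, <server_glob>}."""
    # fnmatchcase understands *, ?, [...] and [!...]. It compares
    # case-sensitively, which is an assumption about ejabberd's behaviour.
    return fnmatchcase(user, user_glob) and fnmatchcase(server, server_glob)


if __name__ == "__main__":
    print(fnmatchcase("test", "test*"))        # True: `*' matches the null string
    print(fnmatchcase("test42", "test?"))      # False: `?' is exactly one character
    print(fnmatchcase("test4", "test[0-9]"))   # True: character range
    print(fnmatchcase("testx", "test[!0-9]"))  # True: negated set
    print(matches_node_glob("aleksey", "jabber.ru", "alek*", "jabber.??"))  # True
```
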

The following ACLs are pre-defined:

- all: Matches all JIDs.

- none: Matches no JIDs.

Access to the different services is allowed or denied with access rules of the following form:

{access, <accessname>, [{allow, <aclname>},
                        {deny, <aclname>},
                        ...
                       ]}.

When a JID is checked for access to <accessname>, the server goes through the list in order and checks whether the JID matches the ACL named as the second element of each tuple. On the first match, the first element of the matching tuple is returned. If no ACL matches, ``deny'' is returned.

Example:

{access, configure, [{allow, admin}]}.

{access, something, [{deny, badmans},
                     {allow, all}]}.

The following access rules are pre-defined:

- all: Always returns ``allow''.

- none: Always returns ``deny''.
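The first-match evaluation of access rules can be modelled in a few lines. The following is a minimal sketch in Python, not ejabberd's implementation: the function names, the data layout (a dictionary from ACL name to a list of ACL specs), and the domain spam.example are assumptions made for illustration, only a subset of ACL types is handled, and JIDs are compared as plain strings.

```python
import re


def acl_matches(spec, user, server, local_host):
    """Check a (user, server) pair against one ACL spec (subset of the types)."""
    kind = spec[0]
    if kind == "all":
        return True
    if kind == "user":
        # ("user", U) means a local user; ("user", U, S) names the server.
        wanted = spec[2] if len(spec) == 3 else local_host
        return user == spec[1] and server == wanted
    if kind == "server":
        return server == spec[1]
    if kind == "user_regexp":
        wanted = spec[2] if len(spec) == 3 else local_host
        return server == wanted and re.search(spec[1], user) is not None
    raise ValueError("ACL type not covered by this sketch: %r" % (kind,))


def check_access(rule, acls, user, server, local_host):
    """Return the action of the first tuple whose ACL matches, else "deny"."""
    for action, acl_name in rule:
        specs = acls.get(acl_name, [])
        if any(acl_matches(s, user, server, local_host) for s in specs):
            return action
    return "deny"


# Pre-defined ACLs: "all" matches every JID, "none" matches no JID.
ACLS = {
    "all": [("all",)],
    "none": [],
    "admin": [("user", "aleksey")],
    "badmans": [("server", "spam.example")],  # hypothetical domain
}
CONFIGURE = [("allow", "admin")]
SOMETHING = [("deny", "badmans"), ("allow", "all")]

if __name__ == "__main__":
    print(check_access(CONFIGURE, ACLS, "aleksey", "jabber.org", "jabber.org"))
    print(check_access(SOMETHING, ACLS, "eve", "spam.example", "jabber.org"))
```

Because the list is scanned in order, placing {deny, badmans} before {allow, all} is what makes the second rule reject those JIDs while admitting everyone else.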

3.1.3 Listened Sockets

The listen option defines the list of listening sockets and the services that run on them. Each element of the list is a tuple with the following elements:

- Port number;

- Module that serves this port;

- Function in this module that starts the connection (likely to be removed);

- Options for this module.

Currently three modules are implemented:

- ejabberd_c2s: This module serves C2S connections. The following options are defined:

  - {access, <access rule>}: This option defines which users may access this C2S port. The default value is ``all''.

- ejabberd_s2s_in: This module serves incoming S2S connections.

- ejabberd_service: This module serves connections to Jabber services (i.e. those that use the jabber:component:accept namespace).

For example, the following configuration defines that C2S connections are accepted on port 5222 and denied for user ``bad'', that S2S connections are accepted on port 5269, and that the service conference.jabber.org must connect to port 8888 with the password ``secret''.

{acl, blocked, {user, "bad"}}.

{access, c2s, [{deny, blocked},
               {allow, all}]}.

{listen, [{5222, ejabberd_c2s, start, [{access, c2s}]},
          {5269, ejabberd_s2s_in, start, []},
          {8888, ejabberd_service, start,
           [{host, "conference.jabber.org", [{password, "secret"}]}]}
         ]}.

3.1.4 Modules

The modules option defines the list of modules that are loaded after ejabberd starts. Each list element is a tuple whose first element is the module name and whose second element is a list of options for that module. See section A for detailed information on each module.

Example:

{modules, [
           {mod_register, []},
           {mod_roster, []},
           {mod_configure, []},
           {mod_disco, []},
           {mod_stats, []},
           {mod_vcard, []},
           {mod_offline, []},
           {mod_echo, [{host, "echo.localhost"}]},
           {mod_private, []},
           {mod_time, [{iqdisc, no_queue}]},
           {mod_version, []}
          ]}.

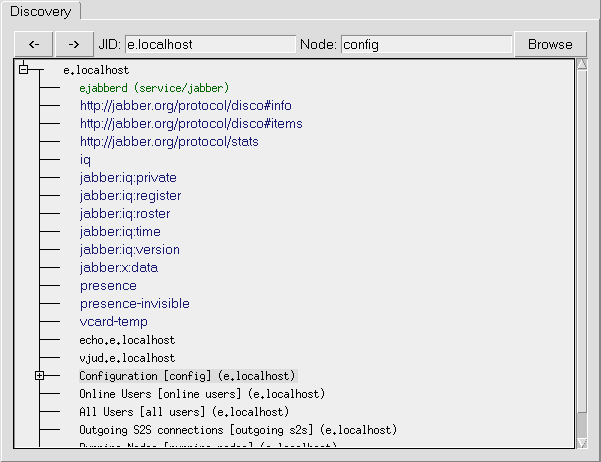

3.2 Online Configuration and Monitoring

To use the online reconfiguration facility of ejabberd, mod_configure must be loaded (see section A.4). It is also highly recommended to load mod_disco (see section A.5), because mod_configure is tightly integrated with it. A disco- and xdata-capable client is recommended as well (Tkabber is developed in step with ejabberd, and its CVS version uses most of ejabberd's features).

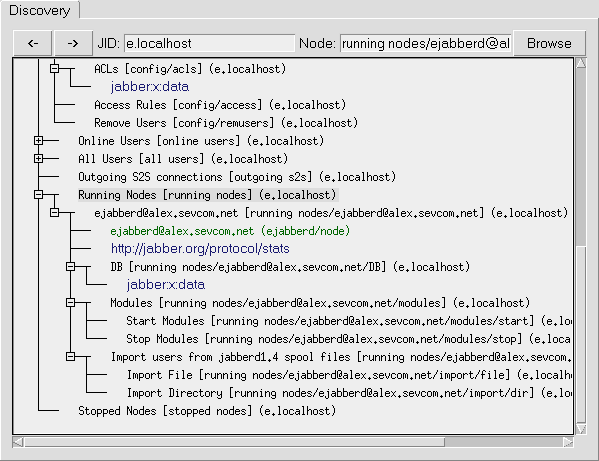

On a disco query, ejabberd returns the following items:

- Identity of the server.

- List of features, including defined namespaces.

- List of JIDs from the route table.

- List of disco nodes described in the following subsections.

Figure 1: Tkabber Discovery window

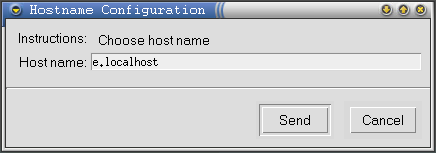

3.2.1 Node config: Global Configuration

The following nodes exist under this node:

Node config/hostname

Via jabber:x:data queries to this node it is possible to change the host name of this ejabberd server (see figure 2). Currently the change takes effect correctly only after a restart.

Figure 2: Editing of hostname

Node config/acls

Via jabber:x:data queries to this node possible to edit ACLs list. (See

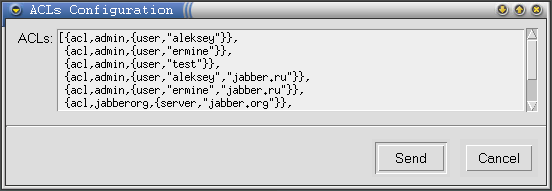

figure 3)

Figure 3: Editing of ACLs

Node config/access

Via jabber:x:data queries to this node it is possible to edit access rules.

Node config/remusers

Via jabber:x:data queries to this node it is possible to remove users. If a removed user is online, that user is disconnected. User-related data (e.g. the roster) is also removed, provided the appropriate module is loaded.

3.2.2 Node online users: List of Online Users

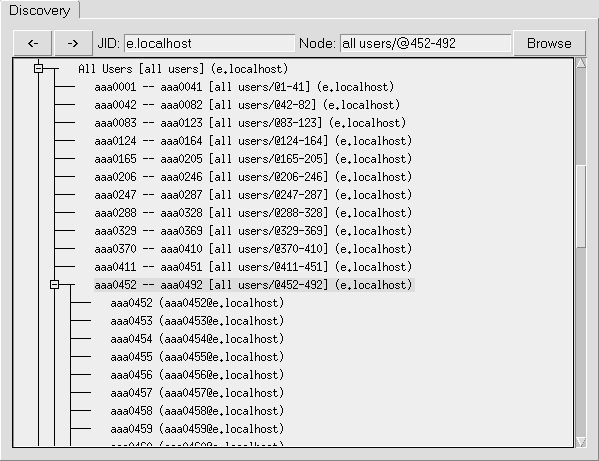

3.2.3 Node all users: List of Registered Users

Figure 4: Discovery all users

3.2.4 Node outgoing s2s: List of Outgoing S2S connections

3.2.5 Node running nodes: List of Running ejabberd Nodes

Figure 5: Discovery running nodes

3.2.6 Node stopped nodes: List of Stopped Nodes

TBD

4 Distribution

4.1 How it works

A Jabber domain is served by one or more ejabberd nodes. These nodes can run on different machines connected via a network. Each node must be able to connect to port 4369 of all other nodes, and all nodes must have the same magic cookie (see the Erlang/OTP documentation; in short, the file ~ejabberd/.erlang.cookie must be the same on all nodes). This is needed because all nodes exchange information about connected users, S2S connections, registered services, etc.

Each ejabberd node runs the following modules:

- router;

- local router;

- session manager;

- S2S manager.

4.1.1 Router

This module is the main router of Jabber packets on each node. It routes them based on their destination domains. It has two tables: local routes and global routes. First, the destination domain of the packet is looked up in the local table; if it is found, the packet is routed to the appropriate process. If not, the domain is looked up in the global table, and the packet is routed to the appropriate ejabberd node or process. If the domain is in neither table, the packet is sent to the S2S manager.
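The lookup order described above can be sketched as follows. This is a toy model in Python rather than ejabberd's Erlang code; the function name `route`, the use of dictionaries for the two tables, and the target names in the usage example are all assumptions made for illustration.

```python
def route(dest_domain, local_routes, global_routes):
    """Return a (kind, target) pair describing where a packet would go."""
    # 1. The local table takes precedence.
    if dest_domain in local_routes:
        return ("local", local_routes[dest_domain])
    # 2. Otherwise try the table shared between nodes.
    if dest_domain in global_routes:
        return ("global", global_routes[dest_domain])
    # 3. Unknown domains are handed to the S2S manager.
    return ("s2s", None)


if __name__ == "__main__":
    local_routes = {"jabber.org": "local_router"}
    global_routes = {"conference.jabber.org": "service_on_node2"}
    for domain in ("jabber.org", "conference.jabber.org", "jabber.ru"):
        print(domain, route(domain, local_routes, global_routes))
```
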

4.1.2 Local Router

This module routes packets whose destination domain is equal to this server's name. If the destination JID has a node part, the packet is routed to the session manager; otherwise it is processed depending on its content.

4.1.3 Session Manager

This module routes packets to local users. It uses the presence table to find the user resource to which the packet must be sent. If that resource is connected to this node, the packet is routed to its C2S process; if it is connected via another node, the packet is sent to the session manager on that node.
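A minimal sketch of this decision, written in Python as an illustration only: the function name, the layout of the presence table (a dictionary from (user, resource) to (node, C2S process)), and the handling of a missing entry are assumptions. The text above does not say what happens when the resource is not in the table, so the sketch merely reports that case.

```python
def route_to_user(user, resource, presence, this_node):
    """Decide where a packet for user/resource goes.

    presence maps (user, resource) -> (node, c2s_process).
    """
    entry = presence.get((user, resource))
    if entry is None:
        # Not covered by the description above; reported as-is.
        return ("not_in_presence_table", None)
    node, c2s_process = entry
    if node == this_node:
        return ("c2s", c2s_process)       # deliver locally
    return ("session_manager", node)      # forward to the owning node


if __name__ == "__main__":
    presence = {
        ("aleksey", "home"): ("node1", "c2s-17"),
        ("aleksey", "work"): ("node2", "c2s-3"),
    }
    print(route_to_user("aleksey", "home", presence, "node1"))
    print(route_to_user("aleksey", "work", presence, "node1"))
```
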

4.1.4 S2S Manager

This module routes packets to other Jabber servers. First, it checks whether an open S2S connection from the packet's source domain to its destination domain already exists. If the connection is open on another node, the packet is routed to the S2S manager on that node; if it is open on this node, the packet is routed to the process that serves the connection; if no such connection exists, it is opened and registered.
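The three cases can be modelled as shown below. This is a sketch in Python under stated assumptions, not ejabberd's code: the connection registry is a plain dictionary keyed by (source domain, destination domain), `open_connection` is a hypothetical callback that creates the connection, and the domain names in the usage example are placeholders.

```python
def route_s2s(src_domain, dst_domain, connections, this_node, open_connection):
    """Return a (kind, target) pair for a packet leaving for another server.

    connections maps (src_domain, dst_domain) -> (node, handler).
    """
    key = (src_domain, dst_domain)
    if key not in connections:
        # No connection yet: open one on this node and register it.
        connections[key] = (this_node, open_connection(src_domain, dst_domain))
    node, handler = connections[key]
    if node == this_node:
        return ("connection", handler)   # served by a local process
    return ("s2s_manager", node)         # owned by another node


if __name__ == "__main__":
    registry = {("a.example", "c.example"): ("node2", "conn-9")}
    opener = lambda src, dst: "conn-%s-%s" % (src, dst)
    print(route_s2s("a.example", "b.example", registry, "node1", opener))
    print(route_s2s("a.example", "c.example", registry, "node1", opener))
```
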

A Built-in Modules

A.1 Common Options

The following options are used by many modules, so they are described in a separate section.

A.1.1 Option iqdisc

Many modules define handlers for processing IQ queries of different namespaces addressed to this server or to a user (e.g. to myjabber.org or to user@myjabber.org). This option defines the processing discipline for these queries. Possible values are:

- no_queue: All queries of a namespace with this processing discipline are processed immediately. This also means that no other packets can be processed until the current query is finished. Hence this discipline is not recommended if processing a query can take a relatively long time.

- one_queue: A separate queue is created for processing IQ queries of a namespace with this discipline, and this queue is processed in parallel with the processing of other packets. This is the most recommended discipline.

- parallel: A separate Erlang process is spawned for each packet of a namespace with this discipline, so all these packets are processed in parallel. Although spawning an Erlang process has a relatively low cost, this can disrupt normal server operation, because Erlang has a limit of 32000 processes.

Example:

{modules, [
           ...
           {mod_time, [{iqdisc, no_queue}]},
           ...
          ]}.
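The difference between the three disciplines can be made concrete with a toy model. The sketch below is written in Python and uses threads as a stand-in for Erlang processes; the class `IQDispatcher` and its methods are hypothetical and only illustrate the scheduling behaviour described above, not how ejabberd implements it.

```python
import queue
import threading


class IQDispatcher:
    """Toy model of the processing disciplines for one namespace."""

    def __init__(self, discipline, handler):
        if discipline not in ("no_queue", "one_queue", "parallel"):
            raise ValueError("unknown discipline: %r" % (discipline,))
        self.discipline = discipline
        self.handler = handler
        self.workers = []
        if discipline == "one_queue":
            # One dedicated worker drains the queue in FIFO order.
            self.queue = queue.Queue()
            threading.Thread(target=self._drain, daemon=True).start()

    def _drain(self):
        while True:
            packet = self.queue.get()
            self.handler(packet)
            self.queue.task_done()

    def dispatch(self, packet):
        if self.discipline == "no_queue":
            self.handler(packet)        # the caller is blocked until done
        elif self.discipline == "one_queue":
            self.queue.put(packet)      # returns immediately
        else:
            # "parallel": one new worker per packet, no upper bound.
            worker = threading.Thread(target=self.handler, args=(packet,))
            worker.start()
            self.workers.append(worker)

    def wait(self):
        """Block until every dispatched packet has been handled."""
        if self.discipline == "one_queue":
            self.queue.join()
        for worker in self.workers:
            worker.join()


if __name__ == "__main__":
    for discipline in ("no_queue", "one_queue", "parallel"):
        handled = []
        dispatcher = IQDispatcher(discipline, handled.append)
        for packet in range(5):
            dispatcher.dispatch(packet)
        dispatcher.wait()
        print(discipline, sorted(handled))
```

In this model only no_queue makes the caller wait for the handler, and only parallel creates an unbounded number of workers, which mirrors the trade-offs listed for the three values.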

A.1.2 Option host

Some modules can act as services and need a separate domain name. This option explicitly defines that name.

Example:

{modules, [
           ...
           {mod_echo, [{host, "echo.myjabber.org"}]},
           ...
          ]}.

A.2 mod_register

A.3 mod_roster

A.4 mod_configure

A.5 mod_disco

A.6 mod_stats

This module adds support for JEP-0039 (Statistics Gathering).

Options:

- iqdisc: processing discipline for http://jabber.org/protocol/stats IQ queries.

TBD about access.

A.7 mod_vcard

A.8 mod_offline

A.9 mod_echo

A.10 mod_private

This module adds support for JEP-0049 (Private XML Storage).

Options:

- iqdisc: processing discipline for jabber:iq:private IQ queries.

A.11 mod_time

This module answers jabber:iq:time queries with the UTC time.

Options:

- iqdisc: processing discipline for jabber:iq:time IQ queries.

A.12 mod_version

This module answers jabber:iq:version queries with the ejabberd version.

Options:

- iqdisc: processing discipline for jabber:iq:version IQ queries.

B I18n/L10n

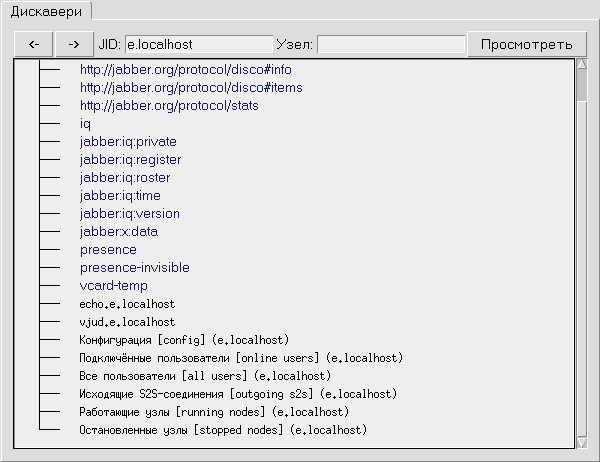

Many modules support the xml:lang attribute inside IQ queries. E.g. figure 6 (compare with figure 1) shows the reply to the following query:

<iq id='5'
    to='e.localhost'
    type='get'>
  <query xmlns='http://jabber.org/protocol/disco#items'
         xml:lang='ru'/>
</iq>

Figure 6: Discovery result when xml:lang='ru'
